AI Algorithms and Techniques Explained. Dive into clear explanations, relatable stories, and practical tips that make complex models feel approachable. Join the conversation, share your experiences, and subscribe for weekly breakdowns that turn intimidating AI topics into memorable, actionable knowledge.

Foundations of AI Algorithms

What truly makes an algorithm intelligent

What looks like intelligence emerges when an algorithm iteratively adjusts its parameters to reduce error on a meaningful objective, learning from informative data. It is less magic, more measurement. If this clicks for you, subscribe and tell us which objective functions you have wrestled with and finally understood.
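To make "reducing error against an objective" concrete, here is a minimal sketch in plain Python. It assumes the simplest possible setup: a one-parameter model `y_hat = w * x`, mean squared error as the objective, and a few made-up data points. Gradient descent repeatedly nudges `w` against the gradient, and the recorded loss shrinks as it goes.

```python
# Minimal sketch: gradient descent on mean squared error for y_hat = w * x.
# The data points and learning rate are illustrative assumptions.

def mse(w, xs, ys):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_grad(w, xs, ys):
    """Derivative of the MSE with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def fit(xs, ys, lr=0.01, steps=100):
    w = 0.0
    history = []
    for _ in range(steps):
        history.append(mse(w, xs, ys))
        w -= lr * mse_grad(w, xs, ys)  # move against the gradient to lower the error
    return w, history

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # roughly y = 2x, with a little noise
w, history = fit(xs, ys)
print(f"w = {w:.3f}; loss fell from {history[0]:.3f} to {history[-1]:.4f}")
```

The "intelligence" here is nothing more than the loss going down: `w` settles near the least-squares value for this data.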

A short story about bias and variance

A new analyst once celebrated 99 percent accuracy on training data, only to watch the model fail spectacularly in production. The lesson was harsh yet priceless: an overly complex model can memorize noise, while a simpler one often generalizes better. Share your favorite bias-variance epiphany, and help someone avoid that painful surprise.
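You can recreate that painful surprise in a few lines. This sketch is illustrative only: the data is synthetic (the true rule is `x > 0.5`, with 20 percent of training labels flipped), a 1-nearest-neighbor classifier plays the "complex" model that memorizes everything, and a single learned cut point plays the "simple" one. The test set uses clean labels so it measures how well each model recovered the real rule.

```python
import random

def make_data(n, noise, rng):
    """Synthetic points in [0, 1): label is 1 when x > 0.5, flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y  # label noise the model should NOT learn
        data.append((x, y))
    return data

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

def fit_nearest_neighbor(train):
    """'Complex' model: 1-nearest-neighbor memorizes every training point, noise included."""
    return lambda x: min(train, key=lambda p: abs(p[0] - x))[1]

def fit_threshold(train):
    """'Simple' model: a single cut point chosen for best training accuracy."""
    best_t = max((x for x, _ in train),
                 key=lambda t: accuracy(lambda x: int(x > t), train))
    return lambda x: int(x > best_t)

rng = random.Random(0)
train = make_data(200, noise=0.2, rng=rng)
test = make_data(2000, noise=0.0, rng=rng)  # clean labels: measures the true rule

for name, fit in [("1-NN", fit_nearest_neighbor), ("threshold", fit_threshold)]:
    model = fit(train)
    print(f"{name:9s} train={accuracy(model, train):.2f} test={accuracy(model, test):.2f}")
```

The memorizer scores perfectly on training data because every point is its own nearest neighbor, yet it carries the flipped labels into production; the humbler threshold model looks worse in training and better on unseen data.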

Data is the real algorithm

We love shiny models, but it is data quality that decides outcomes. Clean labels, representative samples, and honest splits matter. Comment with your best data-cleaning trick, and we will feature the most creative tip in the next newsletter.
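One small, concrete piece of "honest splits" is shown below: a sketch that removes exact duplicates before splitting (so the same example cannot sit in both train and test) and stratifies by label (so a rare class keeps its share on both sides). The function name, the `(features, label)` tuple layout, and the toy spam/ham data are all hypothetical choices for illustration.

```python
import random
from collections import defaultdict

def stratified_split(examples, test_fraction=0.2, seed=42):
    """Split hashable (features, label) pairs so each label keeps roughly the
    same share in train and test. Exact duplicates are dropped first so one
    example cannot appear on both sides of the split."""
    unique = list(dict.fromkeys(examples))  # order-preserving de-duplication
    by_label = defaultdict(list)
    for example in unique:
        by_label[example[1]].append(example)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label]
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test

# Hypothetical data: 100 examples, 20% "spam", plus 10 accidental duplicates.
data = [((i,), "spam" if i % 5 == 0 else "ham") for i in range(100)]
data += data[:10]
train, test = stratified_split(data)
print(len(train), len(test))
```

Near-duplicates (re-encoded images, reworded emails) need fuzzier matching than this exact-match check, but the principle of de-duplicating before splitting is the same.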

Supervised, Unsupervised, and Reinforcement Learning

Supervised learning pairs inputs with desired outputs, training models to map between them. Think email to spam label, photo to object class. If you have ever tuned a threshold at midnight, share your story and subscribe for our practical evaluation checklist.
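Since threshold tuning came up, here is a minimal sketch of one common approach: treat every distinct validation score as a candidate cut-off and keep the one with the best F1. The scores and labels are hypothetical, and F1 is only one possible target; a team that cares more about missed spam or about false alarms would optimize something else.

```python
def f1_at_threshold(scores, labels, t):
    """F1 score when every score >= t is predicted positive (label 1)."""
    tp = sum(s >= t and y == 1 for s, y in zip(scores, labels))
    fp = sum(s >= t and y == 0 for s, y in zip(scores, labels))
    fn = sum(s < t and y == 1 for s, y in zip(scores, labels))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def best_threshold(scores, labels):
    """Try each distinct validation score as a cut-off and keep the best F1."""
    return max(sorted(set(scores)), key=lambda t: f1_at_threshold(scores, labels, t))

# Hypothetical validation scores from a spam classifier (1 = spam).
scores = [0.1, 0.3, 0.35, 0.6, 0.7, 0.9]
labels = [0, 0, 1, 0, 1, 1]
t = best_threshold(scores, labels)
print(t, round(f1_at_threshold(scores, labels, t), 3))
```

Pick the threshold on validation data and report results on a separate test set; tuning on the test set quietly inflates the final number.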

When labels are unavailable, unsupervised methods reveal structure we did not anticipate. Clusters, anomalies, and latent factors tell quiet stories about behavior. Post a comment describing the most surprising cluster you have discovered and how it changed your team’s assumptions.
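Clustering is the classic way to find structure without labels. Below is a sketch of plain k-means (Lloyd's algorithm) in standard-library Python, assuming points are tuples of coordinates and that you already know how many clusters to look for; choosing `k` is its own judgment call. The two toy blobs are made up for illustration.

```python
import math
import random

def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points]

def kmeans(points, k, iters=50, seed=0):
    """Plain k-means (Lloyd's algorithm) on tuples of coordinates."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iters):
        labels = assign(points, centroids)
        new_centroids = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                # mean of each coordinate across the cluster's members
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # leave an empty cluster where it was
        if new_centroids == centroids:
            break  # centroids stopped moving, so assignments are stable
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Hypothetical unlabeled data: two well-separated groups.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centroids, labels = kmeans(points, k=2)
print(sorted(centroids), labels)
```

Nobody told the algorithm which points belong together; the grouping falls out of distances alone, which is exactly why real clusters can surprise a team.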

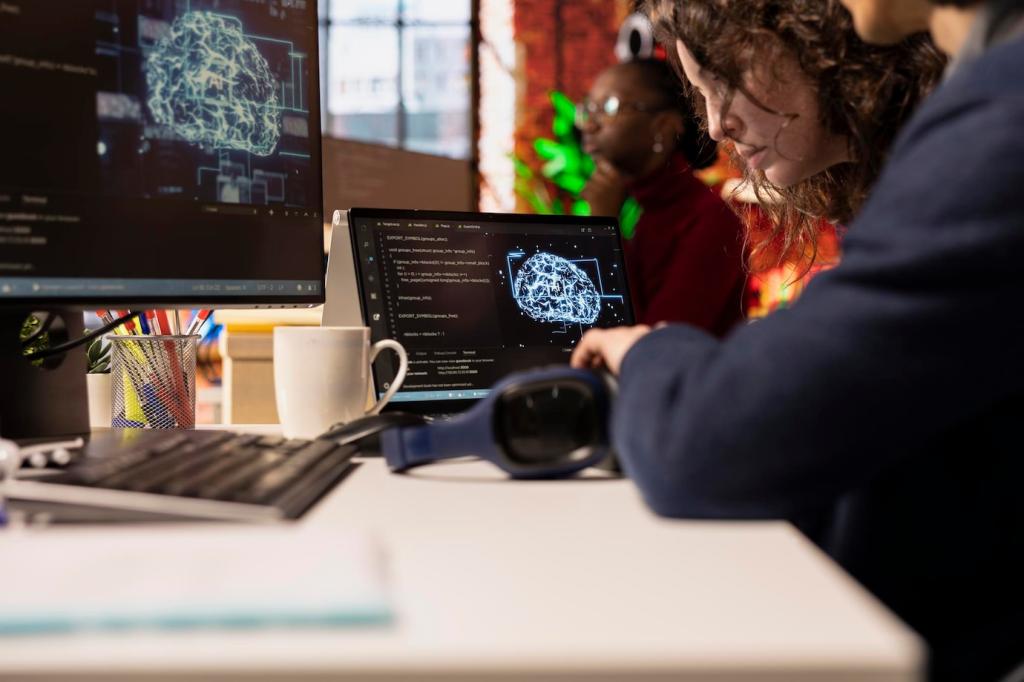

Neural Networks Demystified

Early networks stacked linear layers with nonlinear activations to model complex functions. Transformers built their architecture around attention, letting models weigh the most relevant parts of the context for each position. Share which architecture first made sense to you, and we will compile reader favorites with quick visual explanations.
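The core of attention fits in a few lines. This is a simplified sketch of scaled dot-product attention, `softmax(Q K^T / sqrt(d)) V`, written with plain lists: a single head, no learned projection matrices, no masking. Real transformer layers add all of those, so treat this as the idea rather than a production layer.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention for lists of vectors (single head, no projections)."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much to "focus" on each position
        # weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# A query that strongly matches the first key mostly retrieves the first value.
out = attention([[10.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
print(out)
```

Because the weights come from a softmax, each output is a convex combination of the values: attention selects and blends context rather than inventing it.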

ReLUs, GELUs, and friends control how gradients flow and features interact. Choose poorly, and training stalls; choose well, and representations flourish. Comment with your activation go-to and why, and subscribe for our deep dive into their quirks and trade-offs.
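One way to see how activations shape gradient flow is to compare their derivatives directly. The sketch below uses the exact GELU, `x * Phi(x)` with `Phi` the standard normal CDF (some libraries default to a tanh approximation instead), and its derivative `Phi(x) + x * phi(x)`. For negative inputs ReLU passes a gradient of exactly zero, while GELU still lets a small signal through.

```python
import math

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    return 1.0 if x > 0 else 0.0  # exactly zero for negative inputs

def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_grad(x):
    """d/dx [x * Phi(x)] = Phi(x) + x * phi(x), with phi the standard normal PDF."""
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return cdf + x * pdf

for x in (-2.0, -0.5, 0.5, 2.0):
    print(f"x={x:+.1f}  relu'={relu_grad(x):.3f}  gelu'={gelu_grad(x):.3f}")
```

For large positive inputs the two functions nearly coincide; the trade-offs live around zero and on the negative side.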

Optimization and Training Techniques

Gradient descent and its practical cousins

SGD is sturdy and honest; Adam adapts per-parameter step sizes and often speeds up early progress; AdamW decouples weight decay from the adaptive gradient update, so regularization behaves as intended. Real projects often mix tricks. Comment with the moment you switched optimizers and why, and subscribe for our annotated training recipes.
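The difference between Adam with an L2 penalty and AdamW is easiest to see in a single update. This is a scalar sketch, not a library implementation: the hyperparameter defaults mirror commonly used values, and the decoupled branch shrinks the weight before the Adam step, which is one common way to apply AdamW-style decay.

```python
import math

def sgd_step(w, g, lr=0.1):
    """Plain SGD: move against the gradient by a fixed step size."""
    return w - lr * g

def new_adam_state():
    return {"t": 0, "m": 0.0, "v": 0.0}

def adam_step(w, g, state, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8,
              weight_decay=0.0, decoupled=False):
    """One Adam update on a scalar weight.

    decoupled=False: L2 penalty is added to the gradient (Adam + L2), so it is
                     rescaled by the adaptive denominator like any gradient.
    decoupled=True:  the weight is shrunk directly (AdamW-style), bypassing
                     the adaptive scaling."""
    if weight_decay:
        if decoupled:
            w = w * (1 - lr * weight_decay)
        else:
            g = g + weight_decay * w
    state["t"] += 1
    state["m"] = b1 * state["m"] + (1 - b1) * g      # running mean of gradients
    state["v"] = b2 * state["v"] + (1 - b2) * g * g  # running mean of squared gradients
    m_hat = state["m"] / (1 - b1 ** state["t"])      # bias correction
    v_hat = state["v"] / (1 - b2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)

# With a zero loss gradient, only the regularizer moves the weight.
coupled = adam_step(1.0, 0.0, new_adam_state(), weight_decay=0.1)
decoupled = adam_step(1.0, 0.0, new_adam_state(), weight_decay=0.1, decoupled=True)
print(f"Adam + L2: {coupled:.6f}   AdamW-style: {decoupled:.6f}")
```

In the coupled version, Adam's normalization turns the small penalty into a step of roughly `lr`, whatever the decay strength; in the decoupled version the shrinkage stays proportional to `lr * weight_decay * w`, which is the behavior weight decay is meant to have.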

Regularization that actually helps generalization

Dropout, weight decay, data augmentation, and early stopping reduce overfitting without crushing signal. The art is balancing restraint and expressiveness. Share a regularization combo that saved your model from memorizing, and we will test it across different domains.
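Early stopping is the easiest of these to sketch without a framework. The class below is a hypothetical helper, not any library's API: it watches validation loss and signals a stop once the loss has failed to improve for `patience` epochs in a row. In a real loop you would also save the weights from `best_epoch` and restore them afterward.

```python
class EarlyStopping:
    """Stop when validation loss has not improved by min_delta for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Hypothetical validation losses, one per epoch.
val_losses = [0.9, 0.7, 0.6, 0.62, 0.61, 0.65, 0.5]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch} ({stopper.best})")
```

Notice that this run stops before ever seeing the 0.5 at the end: patience is itself a restraint-versus-expressiveness dial.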

Schedules, warmups, and the power of patience

Warmups tame the chaos of early training, while cosine decay and step schedules gradually lower the learning rate as training matures. Small adjustments nudge models toward smoother convergence. Tell us your go-to schedule in the comments, and subscribe for a scheduler visualizer you can tweak in the browser.
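A warmup followed by cosine decay can be written as a single function of the step number. This sketch assumes a linear warmup and illustrative values for `base_lr`, `warmup_steps`, and `total_steps`; frameworks ship their own schedulers, and their exact conventions (for example, whether warmup starts at zero) vary.

```python
import math

def lr_at(step, base_lr=1e-3, warmup_steps=100, total_steps=1000, min_lr=0.0):
    """Linear warmup to base_lr, then cosine decay to min_lr at total_steps."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps  # ramp up gently
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    # cosine curve from base_lr (progress 0) down to min_lr (progress 1)
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))

for step in (0, 50, 99, 100, 550, 1000):
    print(step, f"{lr_at(step):.2e}")
```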

Evaluation, Metrics, and Validation

Accuracy can flatter imbalanced problems; AUC, F1, and calibration tell fuller stories. In regression, MAE is less sensitive to outliers than MSE, which squares every error and lets a few large misses dominate. Share a case where switching metrics changed a roadmap, and subscribe for our metric selection cheat sheet.
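Both claims are quick to check by hand. The sketch below uses hypothetical numbers: a model that never predicts the rare class still earns high accuracy but zero F1, and a single large regression miss inflates MSE far more than MAE.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1(y_true, y_pred, positive=1):
    """F1 for the positive class: harmonic mean of precision and recall."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)

def mae(y, y_hat):
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)

def mse(y, y_hat):
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)

# Hypothetical imbalanced labels: 5 positives in 100, and a model that never predicts positive.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100
print(accuracy(y_true, y_pred), f1(y_true, y_pred))

# Hypothetical regression targets with one large miss.
y = [10, 12, 11, 13, 100]
y_hat = [11, 12, 10, 13, 14]
print(mae(y, y_hat), mse(y, y_hat))
```

Whether the outlier should dominate depends on the business: if one huge miss really is catastrophic, MSE's harshness is a feature, not a bug.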

A team once validated perfectly, then crashed in production because temporal leakage let information from the future seep into training. Guard your splits like treasure. Comment with your favorite leakage test, and we will feature robust templates you can adapt immediately.
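A simple guard for time-dependent data is to split by date and then assert that no training row is newer than the oldest validation row. The helpers and the `"date"` field below are hypothetical. Note that this catches row-level leakage only; features computed with future information (such as a rolling average that peeks ahead) need their own checks.

```python
from datetime import date, timedelta

def temporal_split(rows, cutoff):
    """Train on rows dated strictly before `cutoff`, validate on the rest."""
    train = [r for r in rows if r["date"] < cutoff]
    valid = [r for r in rows if r["date"] >= cutoff]
    return train, valid

def check_no_future_leak(train, valid):
    """Raise if any training row is dated on or after the earliest validation row."""
    if not train or not valid:
        return
    latest_train = max(r["date"] for r in train)
    earliest_valid = min(r["date"] for r in valid)
    if latest_train >= earliest_valid:
        raise ValueError(f"leak: training data reaches {latest_train}, "
                         f"validation starts {earliest_valid}")

# Hypothetical daily rows for the first ten days of 2024.
rows = [{"date": date(2024, 1, 1) + timedelta(days=i), "y": i} for i in range(10)]

train, valid = temporal_split(rows, cutoff=date(2024, 1, 8))
check_no_future_leak(train, valid)  # passes: 7 earlier days train, 3 later days validate

# An interleaved split mixes past and future, and the check catches it.
try:
    check_no_future_leak(rows[::2], rows[1::2])
except ValueError as err:
    print(err)
```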

Drift erodes performance silently. Track input distributions, output confidence, and real outcomes. Close the loop with feedback. Tell us how you monitor models after launch, and subscribe to receive our lightweight monitoring checklist and notebooks.
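Tracking input distributions can start with one number per feature. The sketch below computes the Population Stability Index (PSI) between a reference sample and live data, using equal-width bins over the reference range and a small floor for empty bins; both choices are assumptions, and production tools often use quantile bins instead. A commonly quoted rule of thumb reads PSI below 0.1 as stable and above 0.25 as a significant shift, but those cut-offs are conventions, not guarantees.

```python
import math

def psi(reference, live, bins=10):
    """Population Stability Index of `live` against `reference`.

    Equal-width bins span the reference range; live values outside it are
    clipped into the edge bins, and empty bins get a small floor to avoid log(0)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def shares(sample):
        counts = [0] * bins
        for x in sample:
            i = min(max(int((x - lo) / width), 0), bins - 1)
            counts[i] += 1
        return [max(c / len(sample), 1e-6) for c in counts]

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

reference = [i / 100 for i in range(100)]      # hypothetical training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # hypothetical live values, shifted upward
print(round(psi(reference, reference), 4), round(psi(reference, shifted), 2))
```

PSI covers only the input side; pairing it with output-confidence tracking and delayed ground-truth labels closes the loop described above.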

From Prototype to Production

Version data, code, and configuration. Seed randomness, log artifacts, and track experiments. Reproducibility is a kindness to your future self. Share your favorite tool stack, and we will compile a community blueprint for reliable ML operations.
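Two habits from that list fit in a tiny sketch: seeding an explicit random generator, and fingerprinting the configuration so every logged result can be traced to the exact settings that produced it. The `run_experiment` function and its config keys are hypothetical stand-ins; real stacks also need to seed every other library that draws random numbers and to version the data itself.

```python
import hashlib
import json
import random

def config_fingerprint(config):
    """Short, stable hash of a run config; key order does not affect it."""
    blob = json.dumps(config, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]

def run_experiment(config):
    """Hypothetical experiment: draws from an explicitly seeded generator
    and returns a record you could log next to the artifacts."""
    rng = random.Random(config["seed"])  # no hidden global random state
    result = sum(rng.random() for _ in range(1000)) / 1000
    return {"config_id": config_fingerprint(config), "config": config, "result": result}

cfg = {"seed": 7, "lr": 3e-4, "model": "baseline"}
record = run_experiment(cfg)
print(json.dumps(record, indent=2))
```

Rerunning with the same config reproduces the same record, and the fingerprint gives experiment logs a compact key.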

Choose batch, real-time, or streaming based on latency and cost. Validate inputs, guard against timeouts, and plan rollbacks. Tell us your serving architecture, and subscribe for reference designs you can adapt in a day.
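For real-time serving, the "validate inputs, guard against timeouts" advice can be sketched with the standard library alone. Everything here is hypothetical: the three-number input schema, `slow_model` standing in for inference, and the fallback value. One caveat: a thread that times out keeps running in the background, because Python threads cannot be killed, so hard limits usually live in the serving infrastructure. Rollbacks are a deployment concern and are not shown.

```python
import concurrent.futures
import time

_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)

def validate(features):
    """Reject requests that do not match the (hypothetical) expected schema."""
    if not isinstance(features, list) or len(features) != 3:
        raise ValueError("expected a list of 3 numbers")
    if not all(isinstance(x, (int, float)) for x in features):
        raise ValueError("features must be numeric")
    return features

def slow_model(features):
    time.sleep(0.05)  # stand-in for real inference latency
    return float(sum(features))

def predict_with_timeout(features, model, timeout_s=1.0, fallback=0.0):
    features = validate(features)  # fail fast on bad input
    future = _executor.submit(model, features)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        return fallback  # degrade gracefully instead of hanging the caller

print(predict_with_timeout([1, 2, 3], slow_model))
print(predict_with_timeout([1, 2, 3], slow_model, timeout_s=0.01))
```

Returning a fallback is only one policy; depending on the product, serving a cached prediction or an explicit error may be the safer choice.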